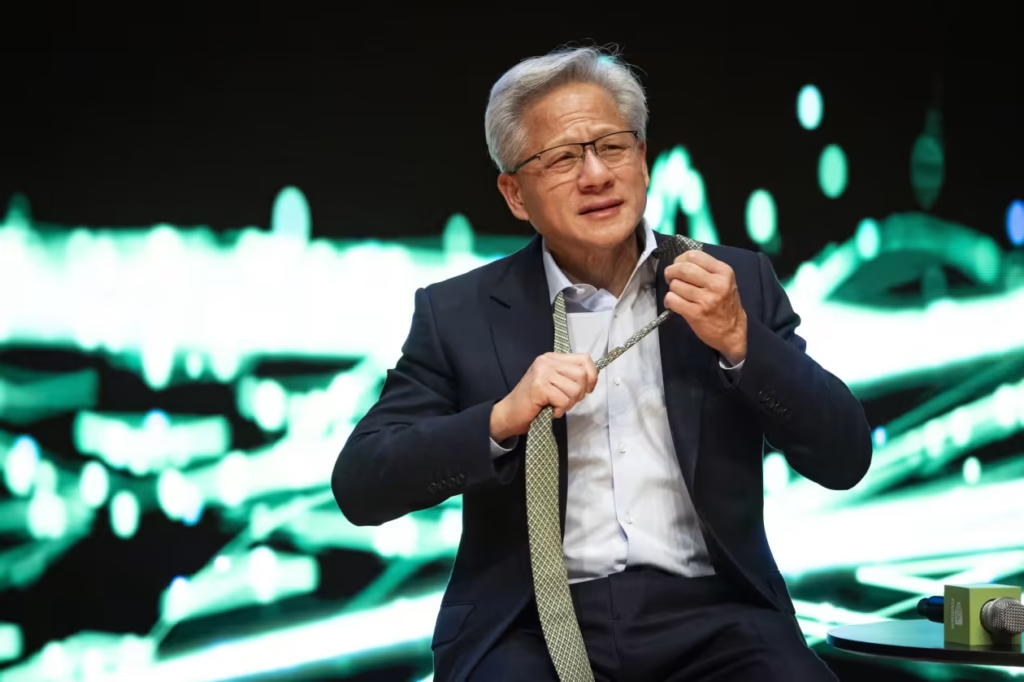

OpenAI’s next GPT model will be crucial in showing how far Nvidia, led by CEO Jensen Huang, remains ahead of its rivals.

Growing concerns over investment in artificial intelligence and new challenges to Nvidia’s dominance have weighed on the company’s shares in recent weeks. Wall Street remains confident about what lies ahead as the AI buildout continues, but these questions are likely to follow the chip maker into the new year.

John Belton, a portfolio manager at Gabelli Funds, told BourseWatch that Nvidia (NVDA) will keep battling the negative narrative that has grown out of pessimism about AI spending heading into next year.

The stock has recovered somewhat but was still 8.6% below its record through Tuesday, after falling as much as 17.4% last week from its record close of $207.04 on October 29. The shares are up 40.9% in 2025, a marked slowdown from last year’s 171.2% run-up, and they trail the 44.3% gain in the PHLX Semiconductor Index (SOX).

However, Jed Ellerbroek, a portfolio manager at Argent Capital Management, told BourseWatch that demand for computing power remains high. He said that because Nvidia is one of a small number of firms supplying most of the industry, its supply chain has grown dramatically in recent years.

Ellerbroek said he expects demand for compute to keep outpacing supply next year. He also doesn’t yet see a worrying AI bubble forming.

Ellerbroek cited supply-chain constraints as one reason.

“Obviously power in the U.S. is in a shortage, and memory is now also in a shortage,” he stated. “The bounce in those stocks over the last couple of months has just been incredible.” “I don’t see shortages in these sectors turning into excess in the near future,” he continued.

Another reason he doesn’t see a bubble is that Taiwan Semiconductor Manufacturing Company (TW:2330), which produces most of the world’s cutting-edge chips, is expanding capacity cautiously. According to Ellerbroek, this means the company should keep its pricing power, which in turn gives customers such as Nvidia and Apple (AAPL) pricing leverage.

Belton said the supply-demand imbalance in the memory market will probably persist for some time, although he expects improvement starting in the second half of next year. One encouraging sign from Nvidia’s November earnings release, he said, was its expectation that gross margins will be in the mid-70% range next year.

Nvidia’s comfort with that gross-margin range shows that the company has been planning for a competitive memory market and has secured favorable pricing, according to Belton.

Ellerbroek is eager to see what new AI models can do once they have been trained on Nvidia’s latest Blackwell chips and its upcoming Rubin chips. He said the next version of OpenAI’s GPT large language model is expected to be released in the first few months of next year and will probably be one of the first Blackwell-trained AI models available.

“We need to see a big step up in the quality of these models and what they can do and how they can be rolled into products and impact businesses and consumers,” Ellerbroek stated.

Belton also believes Nvidia will benefit from OpenAI’s release of its new GPT model and the performance evaluations that follow.

“A very successful launch of the new OpenAI model could say a lot about where we are in the evolution of AI technology, and whether scaling laws are still intact,” Belton said, referring to the observed pattern in which an AI system’s performance improves as resources such as compute power and training data increase. “Assuming it’s successful, it could point to the lead that Nvidia still has.”

He compared the market reaction he expects to OpenAI’s upcoming GPT model with the response to Google’s recent release of its Gemini 3 AI model. Google was seen as an AI laggard for most of the year, but its November Gemini 3 announcement excited investors, who realized the company was not far behind after all. The tech giant said Gemini 3 outperformed Anthropic’s Claude Sonnet 4.5 and OpenAI’s previous GPT-5.1 model on a number of benchmarks.

Because Gemini 3 was trained on Google’s custom chips, co-developed with Broadcom (AVGO), the market began to question the competitive advantage of Nvidia’s graphics processing units over application-specific integrated circuits, or ASICs, which are designed for particular tasks.

Belton, however, believed the market’s response to Gemini 3 was more about Google’s Gemini versus OpenAI’s ChatGPT than about Nvidia’s chips competing with ASICs such as Google’s TPU.

“I think there was this realization that OpenAI is not running away with this market like many have thought,” Belton stated. “That has implications for OpenAI suppliers which include Nvidia.”

Belton said he does not believe custom silicon efforts pose a serious threat to Nvidia’s competitiveness, given how difficult it is to build a chip program and how specific each company’s chip is to its own systems. Nvidia’s merchant silicon, by contrast, is versatile enough to work well in a wide range of data centers.

“I think Nvidia is always going to be the first solution for any customer,” Belton said. “Everything else is going to be for a small number of customers.” He added that, in his view, the best substitute for Nvidia’s latest processor will always be its predecessor.

Belton said the market will be closely watching the progress of the next Stargate data centers that OpenAI is building in the coming months. OpenAI, he said, will give the market crucial information about the financial dynamics of the AI data-center buildout, and an update to its 2026 annual recurring revenue targets would be one encouraging sign.

According to the Wall Street Journal, OpenAI hopes to raise up to $100 billion in its next funding round, which could value the company at $830 billion if it reaches its full target. The round is reportedly expected to close by the end of the first quarter.

As long as all of those metrics are moving in the right direction, Belton said, “any long-term investor is going to be fine and feel comfortable with any sort of delays or lack thereof” in the AI infrastructure buildout.

He said investors should weigh whether OpenAI will be a helpful ecosystem partner in 2026 or an “albatross in the making and something that’s going to cause some serious overbuilding and stress in the ecosystem for years to come.”

Paul Meeks, managing director of Freedom Capital Markets, told BourseWatch he will be keeping a close eye on the stop-and-start shipments of Nvidia’s GPUs to China next year. Nvidia has told Chinese clients it will begin shipping its H200 chips to the country in February, Reuters reported earlier this week.

Citing unnamed people familiar with the matter, Reuters said the shipments could include anywhere from 40,000 to 80,000 of the cutting-edge chips from Nvidia’s existing stockpile. The H200 is significantly more capable than the H20, the chip Nvidia previously designed for the Chinese market to comply with U.S. export controls, and it has been used to train powerful AI models at companies such as OpenAI and Meta Platforms (META).

If the chip maker is allowed to ship its chips to China with fewer restrictions, “its sales in 2026 may rise 15% above [Wall] Street expectations,” Meeks wrote in an email to BourseWatch.